AI Course Policies and Assignment Guidelines

Before writing a policy about AI, understand how students are likely to use AI tools in your course. Spend some time engaging with AI to see how it can, or cannot, be used productively with the assignments you intend to give your students. You may find that this “play” leads you to revise assignments and helps you develop a useful approach to guiding students on appropriate and inappropriate uses of AI. Very likely, you will find that your guidance and policies need to be tailored to different types of assignments.

Surveys suggest that students are more engaged with AI than their instructors are. Some statistics from Tyton Partners’ Spring 2024 survey and report, Time for Class 2024, are instructive:

- 59% of students report that they are regular AI users, compared with only 36% of instructors.

- 50% of students report they would be extremely likely or likely to use AI tools even if their institution banned their use.

As instructors craft their AI policies, recognizing the prevalence of AI use is crucial. AI output reads as authoritative, detailed, and comprehensive, yet only someone knowledgeable about the subject of that output can reliably assess its accuracy or usefulness. Students who are new to a discipline but already use AI to gather information in their daily lives are likely to overestimate AI’s accuracy and usefulness, and they will need careful guidance and education to develop critical thinking about AI.

As the next section makes clear, instructors are in control of AI policy in their courses, but these decisions should be made within the context of understanding how prevalent AI use is currently.

The University’s Academic Dishonesty policy includes as an example of dishonest behavior “unauthorized assistance or unauthorized materials from any source (including but not limited to oral, audio, physical, digital, text messages, generative artificial intelligence (AI), photographs, apps, websites, and services) to generate a work product.” The key word here is unauthorized, placing the onus on instructors to decide if and how they wish to authorize such use.

Further, the University’s policy presses students to acknowledge use of AI: “Cite a generative AI tool whenever work created by the tool is paraphrased, quoted, or incorporated into one’s own work product. If generative AI was used to edit one’s own prose, the use of the tool for this function should be acknowledged in the work.”

At the individual course level, you may consider adding a clarifying statement to your syllabus, such as:

- “Use of Artificial Intelligence (AI) to produce or help produce content without proper attribution or authorization, when an assignment does not explicitly call for or allow it, is plagiarism.”

A few other AI-related things to consider adding to the syllabus:

- information about generative AI’s tendency to hallucinate (produce plausible-sounding but false information), plus clear ground rules making students accountable for verifying any AI outputs they consult or reference.

- a notice about using AI ethically and safely. (ChatGPT’s Privacy Policy acknowledges that OpenAI may share account holders’ personal information with third parties, including vendors and service providers. Teach your students never to share personal or sensitive information with generative AI chatbots.)

- a description of the circumstances under which students will be permitted or encouraged to use generative AI in your course. By encouraging and actively engaging with AI use in some circumstances, you demonstrate openness and AI expertise, which makes your admonitions against AI use in other circumstances more compelling.

- information about how students should cite or credit AI.

Not all courses will have the same policy. That is part of what we need to teach students: faculty design courses and assignments with different purposes and directions, so paying careful attention to guidance on the appropriate use of AI needs to become part of students’ regular practice.

If you are looking for ideas for syllabus language, Montclair faculty statements are being collected, and Lance Eaton of College Unbound has organized a diverse collection of statements from faculty active in AI discussions.

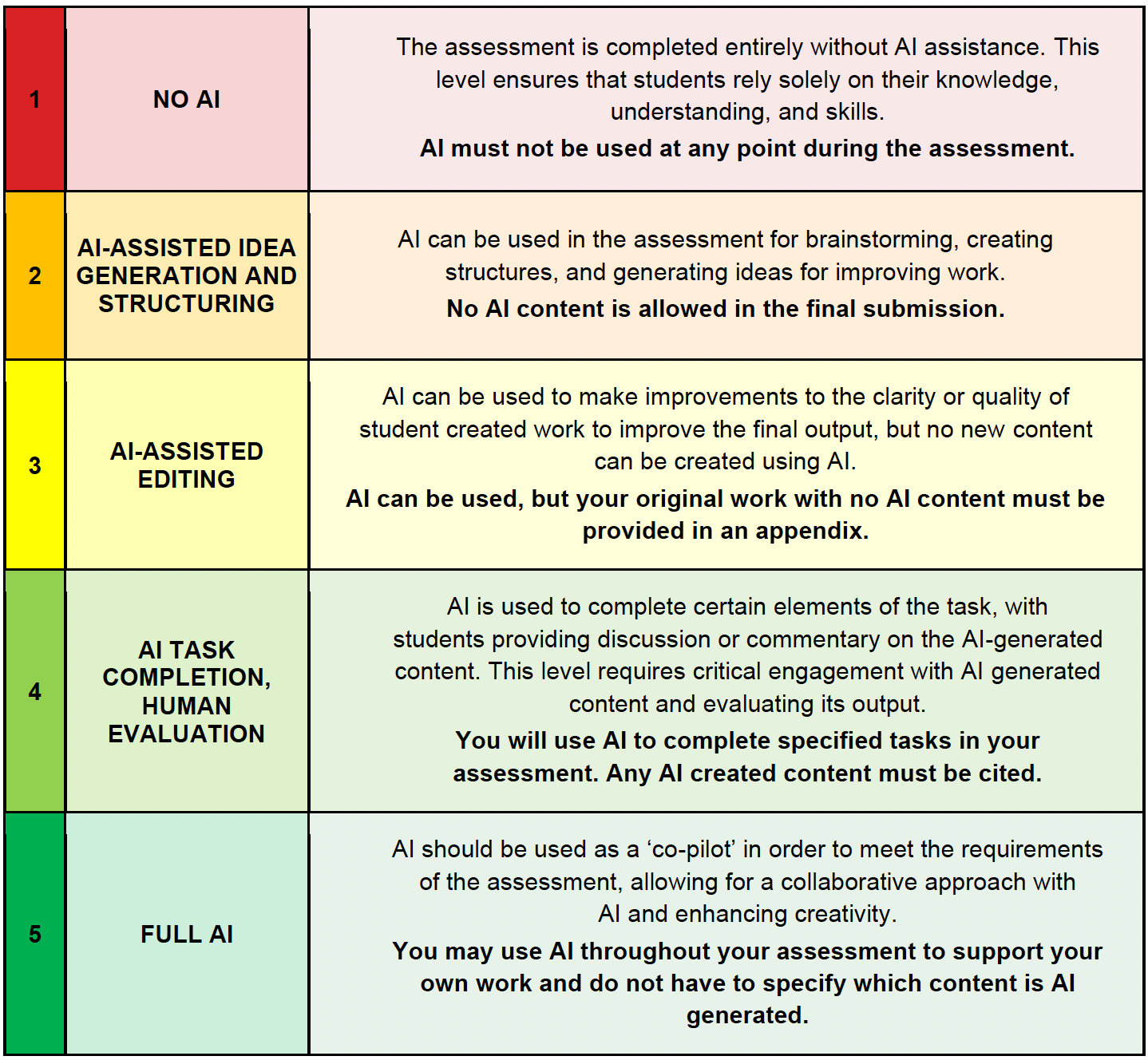

Perkins, Furze, Roe, and MacVaugh (2024) published an AI Assessment Scale that provides a useful framework for communicating acceptable AI usage to students on assignments and assessments:

Last Modified: Monday, September 2, 2024 1:30 pm

EJI


Teaching Resources by Montclair State University Office for Faculty Excellence is licensed under a Creative Commons Attribution-NonCommercial 4.0 International License

Third-party content is not covered under the Creative Commons license and may be subject to additional intellectual property notices, information, or restrictions. You are solely responsible for obtaining permission to use third-party content or determining whether your use is fair use and for responding to any claims that may arise.